The overall performance of any company is linked to its ability to carry out its tasks with a low rate of non-performance. If it is not corrected, this non-performance generates waste and leads to lost time, lost revenue and a damaged reputation.

An effective answer is certainly the deployment of a continuous improvement process that is reliable, repeatable and measurable. Such a process should trigger corrective actions based on accurate and reliable measurements. The ultimate goal is to reduce waste or eliminate any loss of production.

The most common method is a four-step strategy, Plan-Do-Check-Act (PDCA), also known as the Deming cycle. The cycle is used to define a corrective action plan and to redefine any corrective action that does not achieve the result set in that plan, repeating until all defects are eliminated.
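A minimal sketch of the PDCA loop follows. Purely for illustration, it assumes that the process can be summarised by a single defect rate and that each candidate corrective action can be modelled as a function returning the new rate. The names `pdca` and `candidate_actions` are hypothetical.

```python
from typing import Callable, List

# Hypothetical model: a corrective action maps the current defect rate to a new one.
Action = Callable[[float], float]


def pdca(defect_rate: float, target: float,
         candidate_actions: List[Action], max_cycles: int = 10) -> List[float]:
    """Run Plan-Do-Check-Act cycles until the defect rate reaches the target."""
    history = [defect_rate]
    for cycle in range(max_cycles):
        if defect_rate <= target:
            break  # objective reached: no further corrective action needed
        # Plan: select the next candidate corrective action
        action = candidate_actions[cycle % len(candidate_actions)]
        # Do: apply it (simulated here by calling the function)
        trial_rate = action(defect_rate)
        # Check: compare the new result with the previous one
        if trial_rate < defect_rate:
            # Act: keep (standardise) an action that improved the result
            defect_rate = trial_rate
        # Otherwise the action is rejected and another one is tried next cycle
        history.append(defect_rate)
    return history


# Illustrative actions: one halves the defect rate, the other makes it worse.
actions = [lambda r: r * 0.5, lambda r: r * 1.2]
print(pdca(0.08, 0.01, actions))
```

Rejected actions leave the defect rate unchanged, so the recorded history never increases.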

A significant evolution is the Six Sigma method, built around five steps: Define-Measure-Analyse-Improve-Control (DMAIC). This methodology places strong emphasis on measurement and on a Measurement System Analysis (MSA), which checks that the measurement system itself is reliable before its data is used.
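As an illustration of the Measure step, the following sketch performs a simplified repeatability study. One operator measures the same reference part several times, and the spread (6σ) is expressed as a percentage of the tolerance width. This is a simplification of a full gauge R&R study, which also separates out operator reproducibility. The 10 % and 30 % thresholds are the commonly quoted rule of thumb, and the readings are invented for the example.

```python
import statistics
from typing import Sequence


def repeatability_pct(readings: Sequence[float], lsl: float, usl: float) -> float:
    """Spread of repeated readings (6 sigma) as a % of the tolerance width."""
    sigma = statistics.stdev(readings)  # sample standard deviation
    return 100.0 * 6.0 * sigma / (usl - lsl)


def classify_measurement_system(pct: float) -> str:
    # Rule-of-thumb thresholds commonly quoted for gauge studies
    if pct < 10.0:
        return "acceptable"
    if pct <= 30.0:
        return "marginal"
    return "unacceptable"


# Ten repeated readings (mm) of one reference part, tolerance 10.0 +/- 0.3 mm
readings = [10.01, 10.02, 9.99, 10.00, 10.01, 9.98, 10.00, 10.02, 9.99, 10.00]
pct = repeatability_pct(readings, lsl=9.7, usl=10.3)
print(f"{pct:.1f} % of tolerance -> {classify_measurement_system(pct)}")
```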

The latest methodology, known as Lean and developed by Japanese industry in the 1980s, is based on the concept of perceived quality. It relies on collecting a large amount of data from a reliable measurement system and identifying any variation from the expected result.

The Lean methodology focuses on defining a range of acceptable results and segregating every unacceptable variation, which is treated as a non-conformance.
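The following is a minimal sketch of this segregation. It assumes a symmetric tolerance around a nominal value. The function name and the sample lengths are hypothetical.

```python
from typing import List, Sequence, Tuple


def segregate(measurements: Sequence[float], nominal: float,
              tolerance: float) -> Tuple[List[float], List[float]]:
    """Split measurements into conforming and non-conforming results."""
    lower, upper = nominal - tolerance, nominal + tolerance
    conforming = [m for m in measurements if lower <= m <= upper]
    non_conforming = [m for m in measurements if not lower <= m <= upper]
    return conforming, non_conforming


# Part lengths in mm, nominal 50.0 mm with an acceptable range of +/- 0.1 mm
lengths = [49.95, 50.02, 50.12, 49.88, 50.09]
ok, nok = segregate(lengths, nominal=50.0, tolerance=0.1)
print("conforming:", ok)
print("non-conforming:", nok)
print(f"conformance rate: {len(ok) / len(lengths):.0%}")
```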

The Lean process is centred on statistical data analysis, which defines a cloud of scenarios based on the quality perceived by the targeted end user (the persona). Such a process reduces production cost by limiting waste. It also allows less stringent criteria on the measured quality wherever the end user would not perceive the difference.

Deploying such a methodology when defining a company's Quality plan requires identifying, writing and evaluating the procedures for all of the company's internal processes. This is also a requirement of ISO 9001:2000 certification.

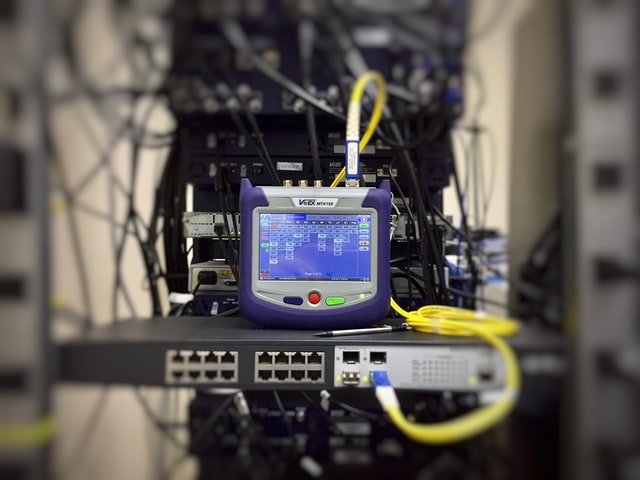

Once the processes are identified, measuring and evaluating them requires a tool that can measure a result and identify any variation from the expected result. For example, variations in dimension, temperature or volume are quantities to be measured. These measurements are always based on references called “etalons”.

Metrology rose to prominence in the 1980s with the implementation of quality control plans. It made it possible to evaluate the outcome of any predictive or curative action, because a measurement process was required to show the final result obtained against the result expected.

The idea was to measure any deviation in a production site's performance in terms of quantity (MTBF, mean time between failures) and quality (TQM, total quality management). This was set against the downtime imposed by condition-based maintenance (MTTR, mean time to repair), in order to optimise overall performance (TCO, total cost of ownership).
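Two of these indicators combine into a standard figure of merit, inherent availability, A = MTBF / (MTBF + MTTR). The following sketch computes it from a hypothetical maintenance log. The operating hours and repair times are invented for illustration, and the link to TCO is not modelled.

```python
from typing import Sequence


def mtbf(operating_hours: float, failures: int) -> float:
    """Mean time between failures: operating time divided by number of failures."""
    return operating_hours / failures


def mttr(repair_hours: Sequence[float]) -> float:
    """Mean time to repair: average duration of the repairs."""
    return sum(repair_hours) / len(repair_hours)


def availability(mtbf_h: float, mttr_h: float) -> float:
    """Inherent availability: share of time the equipment is able to run."""
    return mtbf_h / (mtbf_h + mttr_h)


# Hypothetical log: 1200 h of operation, 3 failures repaired in 6 h, 10 h and 8 h
repairs = [6.0, 10.0, 8.0]
m_tbf = mtbf(1200.0, len(repairs))
m_ttr = mttr(repairs)
print(f"MTBF = {m_tbf:.0f} h, MTTR = {m_ttr:.0f} h, "
      f"availability = {availability(m_tbf, m_ttr):.2%}")
```

Availability increases when failures become rarer (higher MTBF) or when repairs become faster (lower MTTR). This is why both indicators are tracked together.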

An effective metrology strategy is to devise and implement a procedure for periodically checking the company's measurement tools. Such a process requires shipping the equipment for checking and using references, also called “etalons”.
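The periodic check can be sketched as two tests per instrument. The first asks whether its calibration is due. The second asks whether its reading of an etalon stays within a maximum permissible error (MPE). The `Instrument` record, the 365-day interval and the MPE value are hypothetical. Real intervals and MPEs would come from the company's quality procedures and the instrument specifications.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Tuple


@dataclass
class Instrument:
    tag: str                 # hypothetical instrument identifier
    last_calibration: date
    interval_days: int       # calibration interval set by the quality plan
    mpe: float               # maximum permissible error, in the reading's unit


def calibration_due(inst: Instrument, today: date) -> bool:
    """True when the periodic check interval has elapsed."""
    return today >= inst.last_calibration + timedelta(days=inst.interval_days)


def check_against_etalon(inst: Instrument, reading: float,
                         etalon_value: float) -> Tuple[bool, float]:
    """Compare a reading of the etalon with its reference value."""
    error = reading - etalon_value
    return abs(error) <= inst.mpe, error


thermo = Instrument("TT-101", date(2024, 1, 15), interval_days=365, mpe=0.5)
print("calibration due:", calibration_due(thermo, date(2025, 2, 1)))
passed, err = check_against_etalon(thermo, reading=100.3, etalon_value=100.0)
print(f"error = {err:+.2f}, within MPE: {passed}")
```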

Nowadays, with the development of IT tools and the growing volume of data shared between production sites and monitoring tools, metrology focuses mainly on the statistical analysis of data received from multiple sensors.

Digitalising this process makes it possible to use manufacturers' data on sensor drift over time. It also makes it possible to estimate which results remain acceptable over the years, in line with the Lean methodology.
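One simple way to turn a manufacturer's drift figure into an acceptance limit that widens over the years is a linear worst-case model: the initial accuracy plus the drift rate multiplied by the years in service. The linear model and the figures used here (±0.15 °C initial accuracy, 0.05 °C per year) are assumptions for illustration. They do not come from a specific datasheet.

```python
def acceptable_deviation(initial_accuracy: float, drift_per_year: float,
                         years_in_service: float) -> float:
    """Worst-case allowed deviation, assuming drift accumulates linearly."""
    return initial_accuracy + drift_per_year * years_in_service


def within_expected_drift(deviation: float, initial_accuracy: float,
                          drift_per_year: float, years_in_service: float) -> bool:
    """True if the observed deviation can be explained by normal ageing."""
    limit = acceptable_deviation(initial_accuracy, drift_per_year, years_in_service)
    return abs(deviation) <= limit


# A deviation of 0.25 degC from the etalon is normal ageing after 3 years
# (limit 0.30 degC) but points to a defect after only 1 year (limit 0.20 degC).
for years in (1, 3):
    print(years, within_expected_drift(0.25, 0.15, 0.05, years))
```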

This approach makes sensor defects easy to identify. Any result from a sensor that falls outside the statistically expected range flags a defect and triggers a curative action.
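The following is a minimal sketch of this statistical out-of-range check. It assumes limits set at the mean ± 3 standard deviations of a baseline period. This is a simplified Shewhart-style rule; control charts for individual values usually estimate σ from moving ranges instead. The readings are invented.

```python
import statistics
from typing import List, Sequence, Tuple


def control_limits(baseline: Sequence[float], k: float = 3.0) -> Tuple[float, float]:
    """Lower and upper limits at mean +/- k standard deviations of the baseline."""
    mean = statistics.fmean(baseline)
    sigma = statistics.stdev(baseline)
    return mean - k * sigma, mean + k * sigma


def out_of_range(readings: Sequence[float],
                 limits: Tuple[float, float]) -> List[Tuple[int, float]]:
    """Return (index, value) for every reading outside the limits."""
    low, high = limits
    return [(i, r) for i, r in enumerate(readings) if not low <= r <= high]


baseline = [20.1, 19.9, 20.0, 20.2, 19.8, 20.0, 20.1, 19.9]  # degC, normal operation
limits = control_limits(baseline)
alarms = out_of_range([20.05, 19.95, 21.4, 20.1], limits)
print(f"limits: {limits[0]:.2f} .. {limits[1]:.2f} degC, alarms: {alarms}")
```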

Looking ahead, how can the latest technologies be integrated into metrology to improve the monitoring of multiple production sites?

The development of IoT technologies and the deployment of faster communication networks now make it possible to transmit data onshore in real time and to centralise monitoring.

In the offshore industry this capability is still under development. Data can only be transferred when offshore networks are available, and transferring such volumes can be expensive with satellite-based solutions.
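A back-of-the-envelope estimate shows why raw sensor data can be costly to send over a satellite link. The sensor count, sampling rate and record size are hypothetical. They only illustrate how quickly the volume adds up.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def daily_volume_bytes(sensors: int, samples_per_second: float,
                       bytes_per_sample: int) -> float:
    """Raw data volume produced per day by a set of sensors."""
    return sensors * samples_per_second * bytes_per_sample * SECONDS_PER_DAY


# Hypothetical offshore site: 500 sensors sampled once per second,
# each record holding an 8-byte timestamp and an 8-byte value.
volume = daily_volume_bytes(sensors=500, samples_per_second=1, bytes_per_sample=16)
print(f"{volume / 1e6:.1f} MB per day, {volume * 30 / 1e9:.1f} GB per month")
```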

The deployment of more cost-effective communication solutions and faster 5G or 6G technologies should allow fast data transfer from any production site to any monitoring site around the globe.

The recent adoption of AI and the development of more powerful computers make it possible to process much larger volumes of big data in near real time. They also help anticipate critical situations by cross-referencing measurement data with manufacturers' data.

It then becomes possible to anticipate production defects by detecting early variations in measured temperature, dimension or volume. The production process can then be stopped and a curative action triggered just in time.

For example, the drift of a PT100 temperature sensor can be anticipated and built into a measurement procedure that prevents non-quality. The procedure compares criteria such as time in service and measured temperature against the data provided by the manufacturer.
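For a PT100, the manufacturer-side reference is the Callendar-Van Dusen relation standardised in IEC 60751. For 0 °C ≤ T ≤ 850 °C, it gives R(T) = R0(1 + A·T + B·T²), with R0 = 100 Ω, A = 3.9083×10⁻³ °C⁻¹ and B = −5.775×10⁻⁷ °C⁻². The following sketch converts a measured resistance to a temperature. It then compares the deviation from a reference bath with the IEC 60751 class A tolerance of ±(0.15 + 0.002·|T|) °C. The bath temperature and the resistance reading are invented, and this sketch does not model time in service.

```python
import math

# IEC 60751 coefficients for platinum resistance thermometers (T >= 0 degC)
R0 = 100.0        # ohm, PT100 resistance at 0 degC
A = 3.9083e-3     # 1/degC
B = -5.775e-7     # 1/degC^2


def pt100_resistance(t_c: float) -> float:
    """Expected PT100 resistance (ohm) at t_c degC, valid for 0..850 degC."""
    return R0 * (1.0 + A * t_c + B * t_c ** 2)


def pt100_temperature(r_ohm: float) -> float:
    """Invert the quadratic above to get temperature (degC) from resistance."""
    return (-A + math.sqrt(A ** 2 - 4.0 * B * (1.0 - r_ohm / R0))) / (2.0 * B)


def class_a_tolerance(t_c: float) -> float:
    """IEC 60751 class A tolerance in degC."""
    return 0.15 + 0.002 * abs(t_c)


bath_temp = 100.0         # hypothetical reference bath temperature, degC
measured_r = 138.60       # hypothetical resistance read from the sensor, ohm
measured_t = pt100_temperature(measured_r)
deviation = measured_t - bath_temp
print(f"expected R = {pt100_resistance(bath_temp):.4f} ohm, "
      f"measured T = {measured_t:.3f} degC, deviation = {deviation:+.3f} degC, "
      f"within class A: {abs(deviation) <= class_a_tolerance(bath_temp)}")
```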

Combining the Lean methodology with advanced monitoring of measurement tools and production processes can significantly reduce waste. These technologies help optimise production cost, leaving the management of raw-material costs to the purchasing department.